Production K8s, one year on.

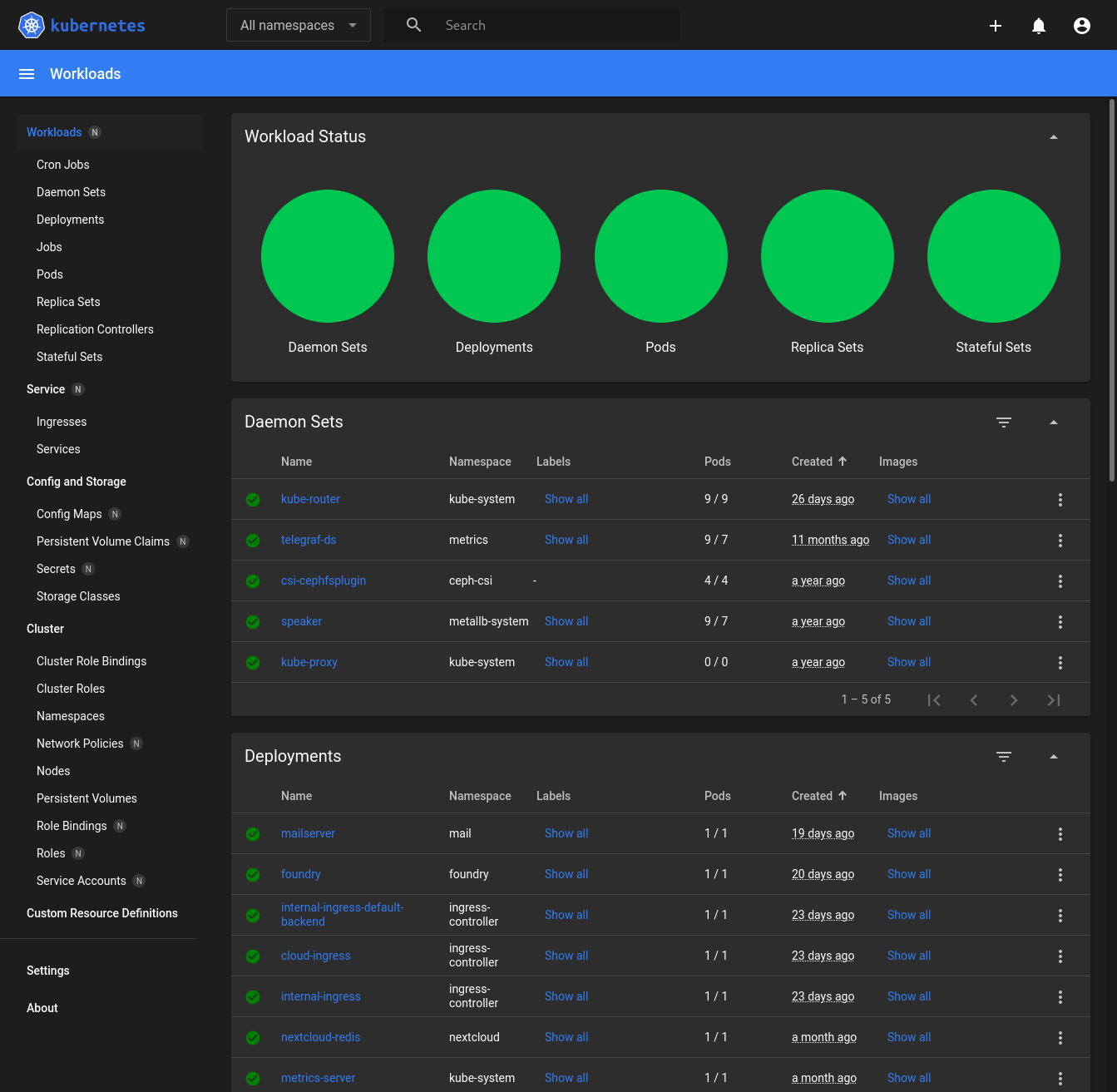

It's been one year since I deployed hydra, my production Kubernetes cluster.

This post is a write-up of the things I did right and the things I did wrong, warts and all. I'll close by talking about my plans for the future.

An unhealthy pod is a dead pod

In the old world, if I deployed a virtual machine or a container, it became my pet. It was my responsibility to manage it and to treat it with care and affection. I gave it a name and I wouldn't dare delete it. In Kubernetes, that's not how things work. The 'closest' [1] analogue to a container is a Pod. How do you restart a pod if its process fails to start properly? You don't.

Pods get removed. They get deleted. They're not pets anymore, not here.

There isn't time to name your pets. They're cattle now. If one cow dies, whatever. If the whole herd does, you've got a problem. This is the kind of thinking I had to switch to when I moved to Kubernetes. You don't restart pods. You destroy them.
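To make the "cattle" idea concrete, here is a toy model of the reconcile loop a controller such as a ReplicaSet runs: when a pod fails or you delete it, nothing nurses it back to health. The controller just notices it's short and creates a brand-new pod with a new name. This is a simplified sketch, not the real controller; the `Pod` type, `reconcile` function and naming scheme are hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Pod:
    name: str
    failed: bool = False


# Hypothetical name-suffix generator; real pods get random suffixes.
_suffix = count(1)


def reconcile(pods: list[Pod], desired: int, prefix: str = "web") -> list[Pod]:
    """One toy reconcile pass: discard failed pods, then top up to `desired`."""
    # Failed pods are never repaired in place; they are simply dropped.
    alive = [p for p in pods if not p.failed]
    # Too many? Trim the excess.
    alive = alive[:desired]
    # Too few? Create brand-new pods with new names and fresh (empty) state.
    while len(alive) < desired:
        alive.append(Pod(name=f"{prefix}-{next(_suffix)}"))
    return alive


pods = [Pod("web-a"), Pod("web-b", failed=True), Pod("web-c")]
pods = reconcile(pods, desired=3)
print([p.name for p in pods])  # ['web-a', 'web-c', 'web-1']
```

Note that `web-1` starts with nothing `web-b` had accumulated locally, which is exactly how hand-made dashboards disappear.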

I think the moment this really sank in was when I lost my lovingly crafted dashboards because I deleted a pod.

This has bigger implications than just deleting pods. Any change you want to keep has to be persisted outside the container, in a PersistentVolumeClaim (PVC), a ConfigMap, or similar. This forces you to think carefully about which files and settings actually matter. If you just persist the container's entire filesystem, you lose the benefit of updating the image, because your old files shadow the new ones. If you don't persist the right files, those changes are gone forever the moment the pod is deleted. Documentation from the developers of the service you're deploying makes this a lot easier, but sometimes you'll hit edge cases it doesn't cover (my Nextcloud deployment, for example, has some ConfigMap mounts in /etc to raise PHP's maximum upload file size).
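As a sketch of persisting only what matters, the snippet below builds a Pod manifest that keeps a data directory on a PVC and overlays a single PHP config file from a ConfigMap key via `subPath`. The ConfigMap name `nextcloud-php-ini`, its `uploads.ini` key, the PVC name, the image tag and both mount paths are assumptions for illustration; check your image's documentation for what actually needs persisting.

```python
import json


def nextcloud_pod(php_ini_configmap: str = "nextcloud-php-ini",
                  data_claim: str = "nextcloud-data") -> dict:
    """Build a Pod manifest that persists specific paths, not the whole container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "nextcloud"},
        "spec": {
            "containers": [{
                "name": "nextcloud",
                "image": "nextcloud:apache",  # assumed image/tag
                "volumeMounts": [
                    # Persist only the data directory (assumed path) on a PVC.
                    {"name": "data", "mountPath": "/var/www/html/data"},
                    # Overlay one file from a ConfigMap key; the rest of the
                    # directory still comes from the image, so upgrades apply.
                    {"name": "php-ini",
                     "mountPath": "/usr/local/etc/php/conf.d/uploads.ini",
                     "subPath": "uploads.ini"},
                ],
            }],
            "volumes": [
                {"name": "data",
                 "persistentVolumeClaim": {"claimName": data_claim}},
                {"name": "php-ini", "configMap": {"name": php_ini_configmap}},
            ],
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML: python pod.py | kubectl apply -f -
    print(json.dumps(nextcloud_pod(), indent=2))
```

One caveat with this design: a ConfigMap mounted via `subPath` does not pick up later edits to the ConfigMap, so the pod has to be recreated to see changes, which fits the "destroy, don't restart" model anyway.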

[1] One could argue that a container (within a pod) is the closest analogue to a Docker container, but a pod is the lowest 'level' you work at in Kubernetes.

Get your secrets right from the start

Secrets, API keys, passwords, all of that. It's sensitive (obviously). Make sure secrets are managed sanely from day 0. I made the mistake of deploying things using secrets stored in git. Secrets aren't encrypted in Kubernetes[2] to begin with, but storing them in git, where CI and anyone with repo access can read them, makes them an incredibly easy target.
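To show why a Secret manifest committed to git is effectively plaintext: the values in a Secret's `data` field are base64-encoded, not encrypted, so decoding them needs no key at all. A minimal illustration; the Secret name and password are made up.

```python
import base64

# A hypothetical Secret manifest as it might sit in a repo (already parsed to a dict).
secret_manifest = {
    "apiVersion": "v1",
    "kind": "Secret",
    "metadata": {"name": "db-credentials"},
    "type": "Opaque",
    "data": {"password": base64.b64encode(b"hunter2").decode("ascii")},
}


def reveal(manifest: dict) -> dict[str, str]:
    """Decode every value in a Secret's `data` field; base64 is an encoding, not encryption."""
    return {key: base64.b64decode(value).decode("utf-8")
            for key, value in manifest.get("data", {}).items()}


print(reveal(secret_manifest))  # {'password': 'hunter2'}
```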

This is the biggest criticism I can make of my own infrastructure. I didn't do secrets properly, and as a result they're scattered throughout my hydra repo. Anyone who is granted access to the repo gets the keys to the kingdom.

Removing the secrets from the repo entirely is on my TODO list, but it's a problem I should have avoided from the start.
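A first step in that cleanup is finding what's there. Below is a rough sketch of a scanner that flags YAML files containing a top-level `kind: Secret` line. It is a line-based heuristic, not a YAML parser: it will miss Secrets nested inside a `List` and can be fooled by unusual formatting. The function and pattern names are hypothetical.

```python
import re
from pathlib import Path

# Matches an unindented `kind: Secret` line (optionally quoted, optionally
# followed by a comment). `kind: SealedSecret` deliberately does not match.
KIND_SECRET = re.compile(r"""^kind:[ \t]*["']?Secret["']?[ \t]*(#.*)?$""", re.MULTILINE)


def find_secret_manifests(repo: Path) -> list[Path]:
    """Return YAML files under `repo` that appear to contain a Kubernetes Secret."""
    hits = []
    for path in sorted(repo.rglob("*")):
        if path.suffix not in {".yaml", ".yml"} or not path.is_file():
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        if KIND_SECRET.search(text):
            hits.append(path)
    return hits
```

Running `find_secret_manifests(Path("."))` from the repo root gives a checklist of files to migrate; the same check could also run in CI to stop new Secrets being committed.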

Status checking is only as good as the check

Status checking gives great insight into the health of a cluster from a user's perspective. It's a nice, black-box way of asking "Is everything running fine?".

The way my network and DNS are designed means my services can work absolutely fine internally while being completely fucked externally. This makes status checking a little difficult: a check run from inside Kubernetes would happily report everything as healthy, so I can't rely on it.

To solve this, I use UptimeRobot to check from the outside. It's not ideal, since there's a pretty hefty delay between something changing state and UptimeRobot reflecting it.

That being said, having these checks is still useful. Knowing the status of your estate is one of the most important things to have when you're running distributed services.
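The "fine inside, broken outside" failure mode only shows up if at least one probe travels the same path your users do. A minimal sketch, assuming you run one probe from inside the cluster and one from an outside vantage point and compare the results; the `probe` and `diagnose` helpers are hypothetical and say nothing about how UptimeRobot works internally.

```python
import http.client
import urllib.request


def probe(url: str, timeout: float = 5.0) -> bool:
    """Return True if `url` answers with a non-error HTTP status within `timeout` seconds."""
    try:
        # urlopen follows redirects and raises HTTPError for 4xx/5xx responses.
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 400
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError, DNS failures, refused connections and timeouts
        # are all OSError subclasses; malformed responses are HTTPException.
        return False


def diagnose(internal_ok: bool, external_ok: bool) -> str:
    """Combine an in-cluster probe with an outside-in probe of the same service."""
    if internal_ok and external_ok:
        return "healthy"
    if internal_ok:
        return "service up, external path broken (DNS/ingress/network)"
    if external_ok:
        return "inconsistent results: check the probes themselves"
    return "service down"
```

Run `probe` on a schedule from both vantage points; the shorter the interval, the smaller the gap between an outage and knowing about it.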

Use a dedicated OS for the cluster

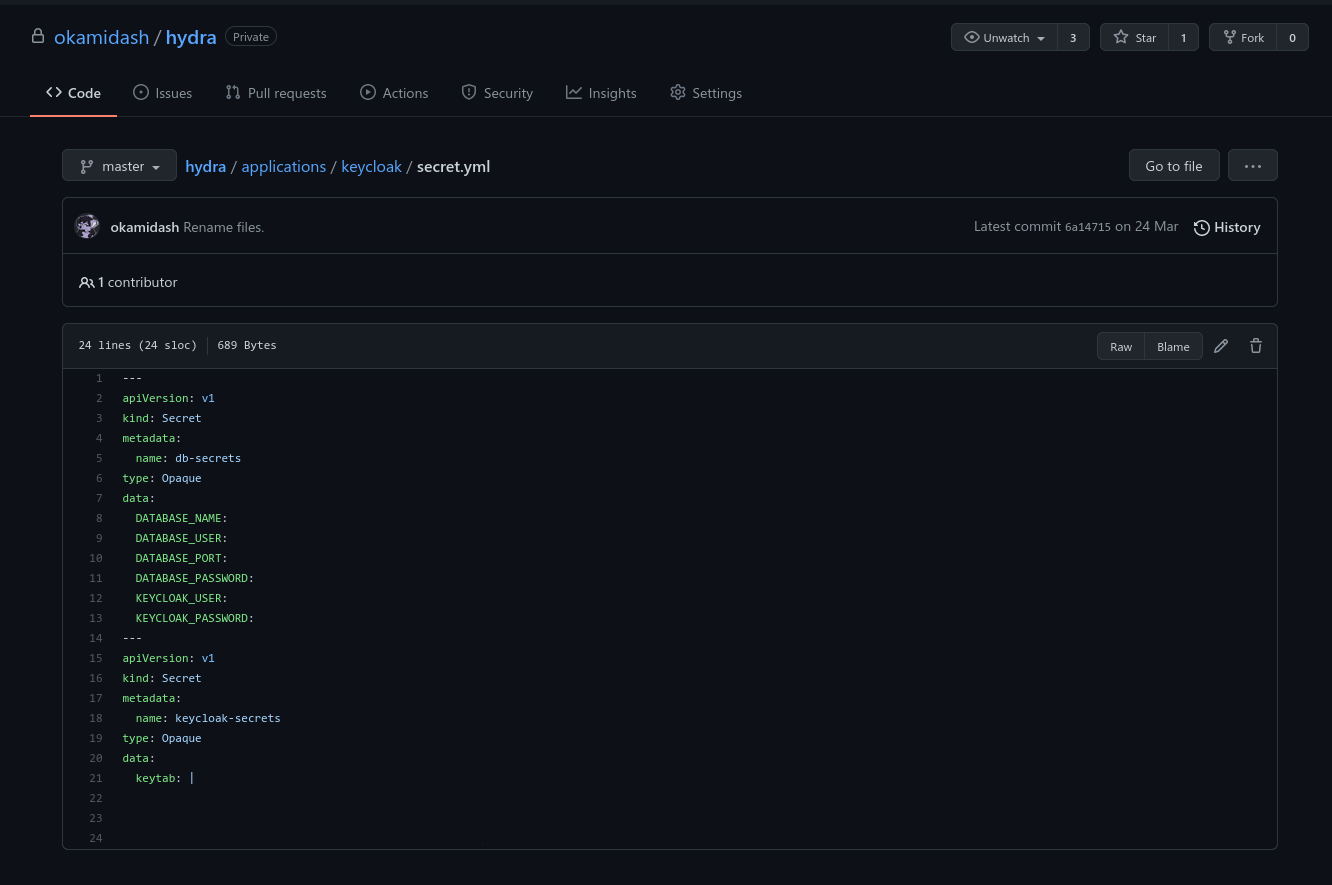

Currently my nodes run a mixture of openSUSE Kubic and Fedora. I started with Fedora and over time I've transitioned to Kubic because it's easier to use.

Ease of use? What do you mean?

Think about maintaining a virtual machine. Keeping it updated. Making sure its config doesn't drift. Making modifications. Now multiply that by 9. It's not fun, even with tools like Ansible automating most of it, and especially on version-based operating systems that release fairly frequently.

Using an operating system that's purpose-built for Kubernetes has saved me a number of headaches.

I don't need to worry about cgroup configuration, repo setup, all of that. Preparing a node for use in a cluster isn't an easy task, and maintaining the OS on top of maintaining the cluster itself isn't much fun either. Keeping Fedora nodes in the cluster makes sense if your nodes are pets, since you can afford to maintain them individually. My nodes aren't pets. They're cattle. They're part of the system.
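For a flavour of what a general-purpose distro leaves you to handle yourself, here is a minimal preflight sketch for a Linux node. The list of kernel settings is an assumption: a commonly needed baseline, not an exhaustive list and not kubeadm's actual preflight logic. Check what your CNI plugin and container runtime require. All function names are hypothetical.

```python
from pathlib import Path
from typing import Optional

# Assumed baseline of kernel settings a node usually needs before joining a cluster.
REQUIRED_SYSCTLS = {
    "net.ipv4.ip_forward": "1",
    "net.bridge.bridge-nf-call-iptables": "1",  # only exists once br_netfilter is loaded
}


def read_sysctl(key: str, root: Path = Path("/proc/sys")) -> Optional[str]:
    """Read a sysctl through /proc/sys, returning None if it doesn't exist."""
    try:
        return (root / key.replace(".", "/")).read_text().strip()
    except OSError:
        return None


def swap_enabled(proc_swaps: str) -> bool:
    """/proc/swaps starts with a header line; any further line is an active swap device."""
    return len([line for line in proc_swaps.splitlines() if line.strip()]) > 1


def preflight(sysctls: dict[str, Optional[str]], proc_swaps: str) -> list[str]:
    """Return human-readable problems; an empty list means these checks passed."""
    problems = []
    for key, want in REQUIRED_SYSCTLS.items():
        got = sysctls.get(key)
        if got != want:
            problems.append(f"{key} is {got!r}, want {want!r}")
    if swap_enabled(proc_swaps):
        # By default the kubelet refuses to start while swap is on (failSwapOn).
        problems.append("swap is enabled")
    return problems


def check_this_node() -> list[str]:
    """Gather live values from the local machine and run the checks."""
    sysctls = {key: read_sysctl(key) for key in REQUIRED_SYSCTLS}
    try:
        proc_swaps = Path("/proc/swaps").read_text()
    except OSError:
        proc_swaps = ""
    return preflight(sysctls, proc_swaps)
```

Splitting the pure `preflight` logic from the file reads keeps it testable off-node; multiply the manual fixes it reports by every node and every OS upgrade, and the appeal of an OS that ships with this already done is clear.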

Avoid dependency locks

When building distributed systems, you will have dependencies. Don't make those dependencies circular. I intentionally built my cluster to avoid these circular locks.

Example (albeit not a great one):

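You set your control plane endpoint to 'api.example.com' and temporarily put its IP in /etc/hosts.

You deploy the cluster, then deploy the DNS server that is authoritative for 'example.com' inside it.

You're now relying on DNS hosted by Kubernetes to provide a function Kubernetes itself critically depends on. Once the /etc/hosts entry is gone, if the cluster goes down it takes the DNS with it, and nothing can resolve 'api.example.com' to bring the cluster back up.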

Don't do this. Avoiding it means something has to live outside the cluster, but that's a tradeoff absolutely worth making. Soft dependencies are easier to deal with, such as a CI system deployed to the cluster that deploys changes to the cluster itself, because you can still do things manually if it fails.
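One way to catch hard dependency loops before they bite is to write the dependencies down as a graph and look for cycles. A minimal depth-first-search sketch; the node names model the DNS example above and are illustrative, and `find_cycle` is a hypothetical helper, not part of any Kubernetes tooling.

```python
from typing import Optional


def find_cycle(deps: dict[str, list[str]]) -> Optional[list[str]]:
    """Return one dependency cycle as a list of nodes, or None if the graph is acyclic."""
    WHITE, GREY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    state = {node: WHITE for node in deps}
    path: list[str] = []

    def visit(node: str) -> Optional[list[str]]:
        state[node] = GREY
        path.append(node)
        for dep in deps.get(node, []):
            s = state.get(dep, WHITE)
            if s == GREY:  # back edge: dep is already on the current path
                return path[path.index(dep):] + [dep]
            if s == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        state[node] = BLACK
        return None

    for node in list(deps):
        if state[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


# Edges read "A depends on B".
hosted_dns = {
    "api.example.com": ["dns:example.com"],
    "dns:example.com": ["cluster"],  # the DNS server runs in the cluster
    "cluster": ["api.example.com"],
}
external_dns = {
    "api.example.com": ["external dns"],  # DNS lives outside the cluster
    "cluster": ["api.example.com"],
    "external dns": [],
}
print(find_cycle(hosted_dns))    # ['api.example.com', 'dns:example.com', 'cluster', 'api.example.com']
print(find_cycle(external_dns))  # None
```

Moving DNS outside the cluster breaks the loop at the cost of one cluster-external component, which is the tradeoff described above.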

It never stops

As is always the case in a DevOps world, the work never stops. It will never be done. The mental laundry list of things I want to change and fix grows every day. That's the reality of Kubernetes, and it's one I've learnt over the past year of running a Kubernetes cluster in production.

The future

My current stack sits on top of oVirt, which is fantastic. However, I've noticed that its development is a bit slow, and a lot of resources have gone into other Red Hat products, so I'm considering replacements.

The one I'm planning (and currently developing) is a dual stack cluster: a Kubernetes-in-Kubernetes cluster using KubeVirt. Of the lessons above, the one I'm most eager to carry over is getting secrets right. Once the design is more concrete, I'll write it up. I don't think anyone has gotten KinKy before :p