Ceph, etcd, and the Sync-hole

Okami delves into a somewhat-known feature on Enterprise SSDs, and shows how much of an impact that they can have on your cluster performance.

Ceph and Etcd (stylized as etcd) are both fairly important pieces in the puzzle that is the cloud landscape. Ceph provides distributed, fault tolerant storage. Ditto for etcd, except that it's a database. Both use some pretty interesting ways of ensuring data integrity that can have surprising performance implications when you don't watch the hardware you're using.

This blog post aims to explain what these implications are, where they matter, and give some proof about these implications. Oh, and also provide some pretty mail-delivering foxes.

How storage works (kinda)

Meet the storage fox. This storage fox is going to be our happy little metaphor to make explaining this a bit easier. Our storage fox delivers mail for us.

Lets say I write a letter (data) to my friend, Paul (the target storage device). I go and give this letter to the storage fox to deliver this mail. The storage fox gets a lot of these letters, so they chuck it into their bag (cache) to deliver at some point today. I can depend on the storage fox to deliver my letter; and I don't need to know when it gets delivered, just that it gets delivered at some point. This makes sending letters very quick, as I can just give it to the storage fox and continue with my day; knowing that the storage fox will get it to the destination.

There's a downside to keeping my mail in the bag. if Paul moves, or the fox gets hit by a truck, that mail isn't gonna get to the destination. It ends up lost.

This way of delivering mail is analogous to what asynchronous writes are within a storage system. I can tell the kernel to write some data, and it'll cache it (on the disk itself, maybe within RAM) while it's writing it to the final destination. My programs won't end up waiting for the storage operation to complete. This is great for situations where speed is key, and I don't need to guarantee it's been written. Asynchronous writes are also useful if I'm going to be reading that data back, as the cache is often much faster to read from. That being said, the downside rears it's ugly head when the system loses power suddenly, or the storage disappears.

If you remember "It is now safe to remove the USB" in Windows, this is exactly why.

Now, lets say that I've got a very important message to send to Paul. It's a cute cat photo I found on the internet, and I can't wait to see Paul's reaction. I give it to the storage fox, and I tell the fox to deliver this mail right away and tell me to come straight back when it's been delivered. This means I spend the entire time waiting for that mail to get to it's destination, but I will know that it's been delivered or not. Unfortunately that entire transaction takes much longer to complete, as I'm now waiting for the fox to deliver the message, instead of carrying on with my day.

That's how a synchronous write works. I tell the kernel to ignore any caches and tell the storage target to confirm that the data has been fully written. Once I get that confirmation, I know that I can endure power loss, or unplug the device, and nothing will be lost. Synchronously writing data is slow though. If you've noticed transfer speeds suddenly drop off a cliff when writing large files, you've exhausted the cache and are now writing directly to the disk.

Why does that matter?

Writing data synchronously vs asynchronously is important. Writing asynchronously means you can achieve very fast IO per second (IOPS), but you haven't fully committed the data until another point later in time. Writing synchronously on the other hand is much slower; but you guarantee that a write is actually written. Most of the time, you don't really need to care, unless you're writing Databases or similar. This is where Ceph and etcd come in.

Ceph and Etcd like safe data.

Moving on from the fox drawings, it's time to talk about Ceph and Etcd. They both write data synchronously... sorta. They use a concept known as write-ahead logging (WAL). This is essentially a log of what's going to get written somewhere. Data is first committed to the WAL (synchronously), and then to the persistent storage.

It's sorta like a cache, but fully explaining it (along with it's benefits) are outside the scope of this post. All you really need to be concerned with, is that the WAL is always written synchronously to ensure data integrity. This kills performance on a lot of drives; as they're most commonly built with asynchronous performance in mind.

Alright, I guess I get it now? Ceph and Etcd are slow, because they use synchronous writes, and synchronous writes are slow?

Storage can lie, and that's sometimes good.

This is the heart of why I wrote this blog post. You can get your consumer storage devices; and enterprise drives. While consumer drives are measured in terms of raw speed; enterprise drives are measured in features and reliability.

Lets take a look at 2 drives, for example.

The first is a fairly standard Consumer drive, the Samsung 970 EVO Plus. This drive in particular is advertised as having sequential write speeds of 2300MB/s, and sequential read speeds of 3500MB/s.

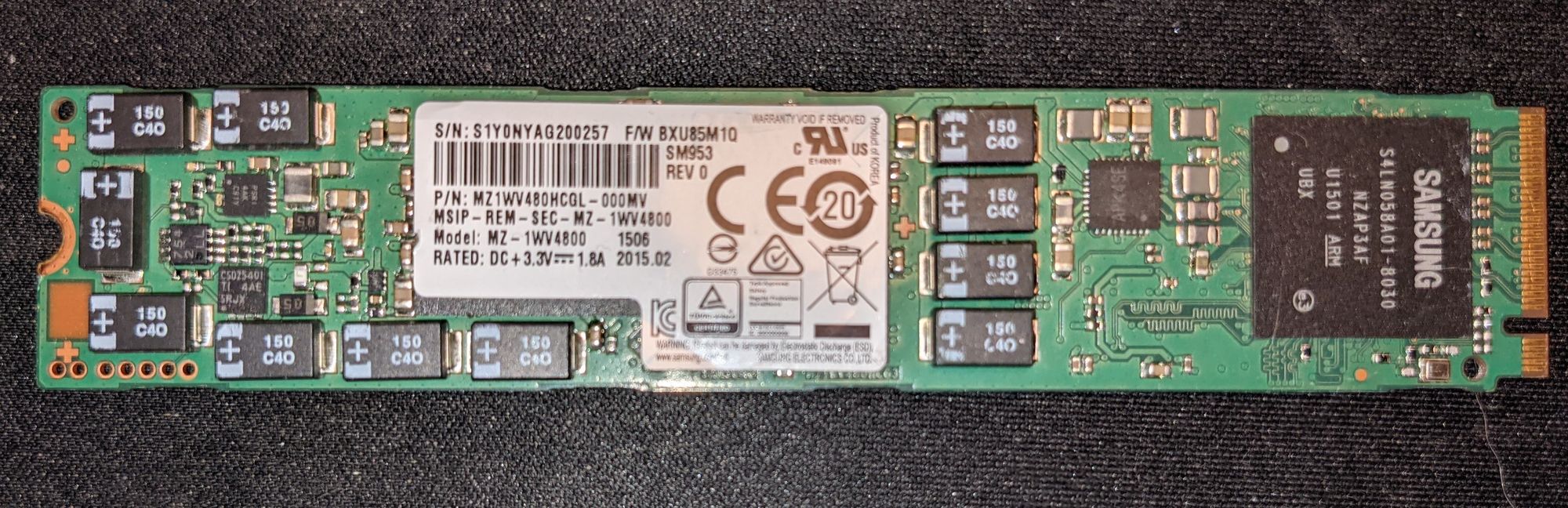

The second drive is a Samsung SM953. With quoted speeds of 1,750 and 850 MB/s (read and write sequentially).

By any aspect, the 970 EVO should be faster, right? It's advertised as being faster.

When writing synchronously, directly to the disk and bypassing the cache; the SM953 is way faster. This is because it lies.

In the above picture, the SM953 has little black boxes marked with the plus (+) symbol. These are capacitors that provide the SSD with power in the case of a power outage.

Doesn't that mean that even with asynchronous writes, the data is safe?

Yep, and the engineers who make these kinds of drives know that. When you force a synchronous write to the drives with these capacitors on, these drives ignore that and treat it as if it were an asynchronous request. They lie that they're actually writing the data to the storage, since they can tolerate power loss. It's not 1:1 the same, but it's close enough to be negligible.

That makes these kinds of drives faster for applications like Ceph and Etcd; than traditionally 'faster' drives. This is because the WAL (cache) gets written synchronously, which these drives are really good at.

It's key to note that I will be referring to drives with the power loss protection feature as PLP drives.

One other thing that's worth nothing, is that enterprise drives are rated for a far higher level of endurance than typical SSDs. They often outlast their use case, so you can frequently see them on ebay for cheap; with only ~5% of their total life used.

The testing set-up

Testing this wasn't easy. I figured I could come out with metrics and say "x drive is 30x faster than another"; but that's really, really hard to actually prove. Different workloads are better with different drives.

To make things simple(r), I set out to to try and prove the following hypotheses:

Direct, synchronous writes are faster on drives with power loss protection

Ceph, and (to a lesser extent) etcd perform better on drives with power loss protection

Where 'faster' and 'better' can be considered as that higher IOPS, higher bandwidth and lower latency in a like-for-like comparison. This leaves some interpretation up to the reader, but I'm going to consider any comparison being beyond a 10% margin to be 'faster'.

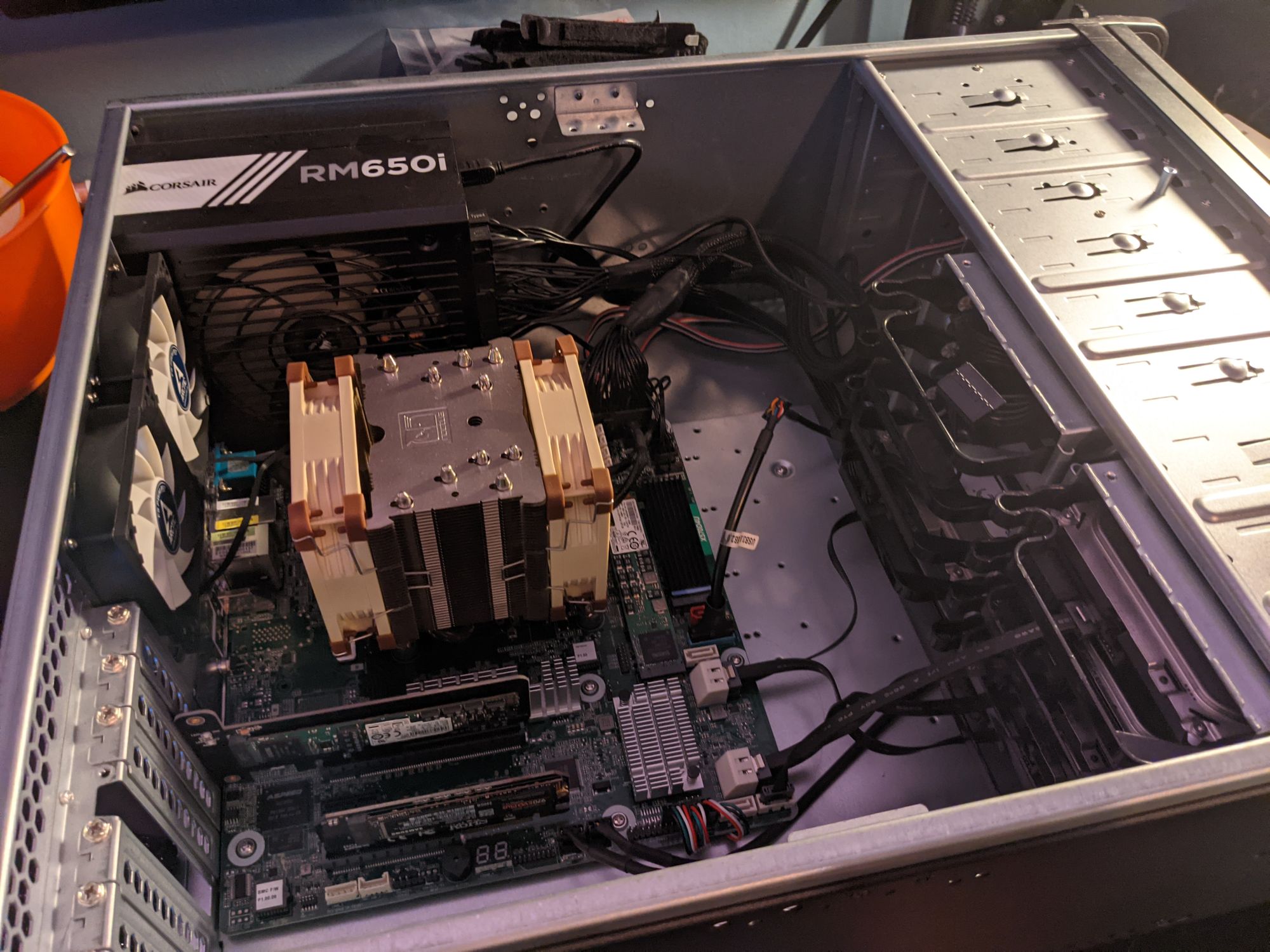

As I'm in the process of a cluster rebuild, I put one of my hypervisors to work being the testing platform.

To ensure that the tests are a like-for-like comparison, they are being run on the same host, with the same OS, the same packages and mostly the same variables.

One of the considerations I had when thinking about how to test this, was whether the host IO (i.e the install of the OS) could negatively affect performance. To solve this, I'm running Alpine Linux entirely in memory. This eliminates any considerations of 'host OS storage'; as it's just RAM.

I made a number of other considerations like the above, but the basic gist is that I tried my best to keep this as small, fast and fair as possible.

The plan was to run a set of 3 tests.

- Raw (FIO based) benchmark published by ETCD

- The etcd benchmarking tool, targetting a formatted filesystem

- A disk benchmarking tool intended for use in Kubernetes.

For each of these tests, I put 4 drives up against the task.

The four drives are as follows:

nvme0n1Samsung PM963 (960GB)nvme1n1Samsung 970 EVO Plus (500GB)nvme2n1Samsung SM953 (480GB)nvme3n1Samsung 970 EVO Plus (250GB)

The first (PM963), and third (SM953) drives have power loss protection.

These are the ones I was hoping to prove are faster.

Lets begin!

Test 1: FIO

After getting the OS booted, I ran FIO on each drive on a mounted directory under /mnt/.

This first test was looking at proving that the latency is lower on PLP drives.

This test was almost like-for-like copied from a post about etcd performance.[1]

The FIO command run was as follows:

export DISKNAME=nvme0n1

fio --rw=write --ioengine=sync --fdatasync=1 --directory=/mnt/${DISKNAME}p1/testdir --size=22m --loops=10 --bs=2300 --name=$DISKNAME(The referenced blog post goes into great depths talking about the WAL and etcd. I highly recommend checking it out.)

The results were promising.

Please note, for this graph, the results are not linear. They're logaritmic, as the difference between both sets of drives are massive. (~30 usec vs 785, for example)

Comparing the average sync latencies, you can see that the latency for each percentile of the test was lower on drives with power loss protection.

In the graphs (and all subsequent graphs), the PLP drives are the ones that begin with MZ.

Since the drives are able to lie about the disk writes, they're able to respond much quicker than the drives that remain truthful.

Test 2: Etcd

Moving on, I installed etcd onto the host, and launched it, using each drive as the target 'data' directory.

The actual command run was as follows:

export DISKNAME=nvme0n1

etcd --force-new-cluster --data-dir $ETCDDIR/data --log-outputs /var/log/${DISKNAME}

I then used the etcd benchmarking tool to run my tests on the freshly created etcd cluster.

benchmark \

--target-leader \

--conns=100 \

--clients=1000 \

put \

--key-size=8 \

--sequential-keys \

--total=100000 \

--val-size=256Since I wanted to really stress these drives, I opted for a higher number of connections, clients and keys; than is what's usually recommended for benchmarking.

The results are as follows:

From the 10% to the 75% percentile, the results look almost exactly the same. Essentially, there's almost no difference in 75% of writes. Beyond that is where you start to see a difference in results, with the PLP drives increasing in latency at a much more gradual pace than the non-PLP drives. The difference is nowhere near the same as the fio based tests, and I suspect that etcd may not be immediately syncing writes to disk, or that a bottleneck/slowdown might be elsewhere. I didn't want to dwell too far on this, as there's still a fairly clear difference in the higher percentiles, and I think that demonstrates 'better'.

Test 3: Ceph

Ceph was setup by running a single node Kuberetes cluster and deploying Rook onto it. From there, I was able to create one cluster for each drive fairly painlessly.

I built the cluster using the following Kubernetes components.

kubernetes deployment method: kubeadm

Container runtime interface: cri-o

Container runtime: crun

Network layer: kube-router (replacing kube-proxy)Once Ceph and rook was installed, I tinkered around with modifying the number of replicas and OSDs for each cluster; and ran tests for each change.

If you're totally new to Ceph, the replica count is the number of times one 'thing' will get written to a disk. A replica 3 setup will mean that 3 replicas of one 'thing' are stored when you issue a write.

An OSD, is a 'partition' of a disk. For faster drives, more OSDs can increase your performance. If you're new to Ceph, just think of it as different ways to configure your cluster. Some are faster (lower replicas), and some change how your data ends up on the disk (OSDs).

I ended up running 4 rounds of tests, which are as follows.

- 4 OSDs, 1 Replica

- 4 OSDs, 3 Replicas

- 5 OSD, 1 Replica

- 5 OSD, 3 Replicas

This would give me a fairly comprehensive set of data to work with, and also allow me to see how best to deploy things when the time comes to bring up my new infra.

Once I figured out the different cluster types I'd test, I decided to use the yasker/kbench image to benchmark, as it seems to give a pretty nice set of results. It also seems fairly 'purpose built' for Kubernetes, so this will hopefully give results that closely align with what I'd expect to see in a 'real world' deployment.

---

apiVersion: batch/v1

kind: Job

metadata:

name: fio

spec:

backoffLimit: 4

template:

spec:

restartPolicy: Never

containers:

- name: dbench

image: yasker/kbench:latest

imagePullPolicy: Always

# privilege needed to invalid the fs cache

securityContext:

privileged: true

env:

- name: SIZE

value: 20G

- name: FILE_NAME

value: /data/testfile

volumeMounts:

- name: disk

mountPath: /data

volumes:

- name: disk

persistentVolumeClaim:

claimName: fio

With that out of the way, lets look at the results.

Bandwidth

First up, is bandwidth. This is the amount of data that is able to get onto the disks, measured in Kilobytes per second.

These tests are for random writes. This is the sort of workload you'd encouter if you were updating a game, or your OS. Lots of files, in a number of different places. This is often where hard drives suck because they need to move the physical R/W head to find the data.

This graph displays the sequential writes over 4 different cluster configurations. A sequential write is essentially 'one after the other'. A real example of this would be streaming a movie.

In both cases, the power loss protection drives were far ahead of the competition. We can also see that the number of OSDs matter less in sequential writes, and this is due to the fact that 4 streams to the same drive vs 5 streams isn't going to matter when you're writing large files. What does seem to matter, is the number of replicas.

IOPS

IOPS is an important metric. It essentially dictates how many operations per second a drive can complete. This correlates with latency, as a higher latency will result in lower IOPS (and vice versa).

The random results above paint a similar picture to bandwidth. PLP drives are scoring far ahead of their counterparts. The same use cases apply for random and sequential; so a high random IOPs metric will mean your applications spend less time waiting.

Next, sequential IOPS. These also show that when writing sequentially, you're not going to encounter as much of a bottleneck when using between 4 and 5 OSDS.

So far, we have a 2/2 winning set landing solely at the PLP drives. Next up is latency.

Latency

Latency is a figure you want to keep low for a storage system. Much like how waiting in line for the poor employee to scan your meal deal feels bad; data waiting to be written feels bad.

Here we can see the latency (in nanoseconds) for the random writes is nearly 3x slower when writing to non PLP drives.

The same can be said for sequential writes.

That's 3 for 3. Looks like it's pretty clear that PLP drives are far better suited for Ceph, than non PLP drives.

Limitations

Before I get into the conclusion, it is worth raising a limiting factor in my testing.

I do not have a large sample set

This is true. I have only been testing on four drives. It's something I can't exactly get around without a research budget, and my spare salary is currently spent on other adventures. To combat this, I am going to be publishing all of my testing assets on Github, and if you have any spare time, please run the tests yourself and submit a pull request.

Conclusion

Storage is hard. There is a lot of information to take in, and I think it's important to note that I do not know all the answers. This isn't my specialty. I'm willing to accept that I am wrong on a number of these things.

Some key points to note having said that. These tests are a like-for-like comparison between drives. I attempted to control every condition that may have affected the performance otherwise. By all accounts, the testing I have performed is valid; but don't take it as gospel.

I wrote this blog post to prove 2 key points.

Direct, synchronous writes are faster on drives with power loss protection

I feel like I have been able to prove this fairly well. Looking at the results from section 1 (FIO), it's fairly clear that the drives perform better, scoring a lower latency; when given a direct, synchronous workload.

Ceph, and (to a lesser extent) etcd perform better on drives with power loss protection

The results from Ceph I feel are the shining star in all of this. In every test, with varying degrees of replication, OSDs, and workloads (Sequential and Random), the drives equipped with power loss protection scored significantly higher than the ones without. That's about as clear cut as it gets.

If you want to go ahead with deploying Ceph on non-plp drives, I would suggest a replica 1 setup, with either 4 or 5 OSDs for each drive. You ideally want to minimize the number of total writes to the drive for each 'user' write. Backwards logic, but the tests seem to show that you'll acheive higher performance when you can write less.

Anyway.

This is been one of the most difficult posts I've written in a long time. I'm currently staring at the clock, it's 1:15 AM on a Friday. Countless weekend have gone into this to give the level of detail I think this work deserves. I would love to get your feedback.

Hopefully this will guide your drive purchasing in the future if you're deploying Ceph and/or etcd.

Signing off,

Okami.

(Btw, the art is made by my soon-to-be wife. Thanks Aur <3 )